Cooperative Neural Networks (CoNN) — An overview

Cooperative neural networks (CoNN): Exploiting prior independence structure for improved classification. This work was published at Neural Information Processing Systems (NIPS) 2018 [link]. (code) (poster_NIPS)

This is a generic technique for building neural network architectures informed by prior knowledge of the domain, with the aim of improving performance on related classification tasks. The technique can also be extended to tasks other than classification (not covered in this blog).

Key idea:

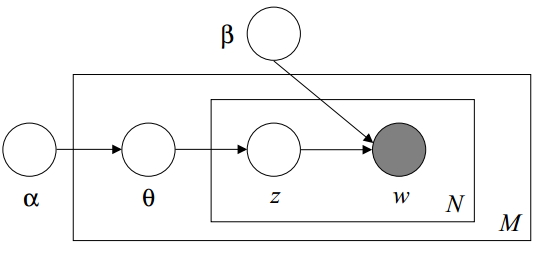

- Determine a Probabilistic Graphical Model (PGM) for the domain under consideration (e.g., Latent Dirichlet Allocation (LDA) for document analysis, or Bayesian Networks (BN) describing medical systems, alarm monitoring systems, finance domains, etc.).

- Akin to the Variational Inference technique (link), we choose an approximate graphical model and then aim to minimize the KL divergence between the approximate and true distributions. (#figures below) Here we focus on the LDA model for document analysis, and our corresponding model is called CoNN-sLDA.

- Now comes the main contribution and insight of this work. As the solutions to the fixed-point update equations are intractable (# equation snippet), we embed them in a Hilbert space and approximate all the operators in that Hilbert space with deep neural networks.

- Our algorithms learn these embeddings end-to-end, using the target label as the supervision signal. (# Algo1 & Algo2)
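
To make the variational step in the list above concrete, here is the generic mean-field form for plain LDA. This is a sketch under assumptions: the symbols $\theta$ (document-topic proportions), $z_n$ (topic assignment of word $n$), and $w_n$ (observed word $n$) follow the usual LDA notation, and the fully factorized family is the textbook choice, so the exact approximating family used for CoNN-sLDA may differ.

$$
q(\theta, z_{1:N}) = q(\theta)\prod_{n=1}^{N} q(z_n), \qquad
q^{\star} = \arg\min_{q}\ \mathrm{KL}\big(q(\theta, z_{1:N}) \,\|\, p(\theta, z_{1:N} \mid w_{1:N})\big)
$$

$$
q(\theta) \propto \exp\Big(\mathbb{E}_{q(z_{1:N})}\big[\log p(\theta, z_{1:N}, w_{1:N})\big]\Big), \qquad
q(z_n) \propto \exp\Big(\mathbb{E}_{q(\theta)\prod_{m \neq n} q(z_m)}\big[\log p(\theta, z_{1:N}, w_{1:N})\big]\Big)
$$

Each factor is defined in terms of the others, which is what makes these fixed-point equations: $q(\theta)$ depends on all $q(z_n)$, and each $q(z_n)$ depends on $q(\theta)$.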

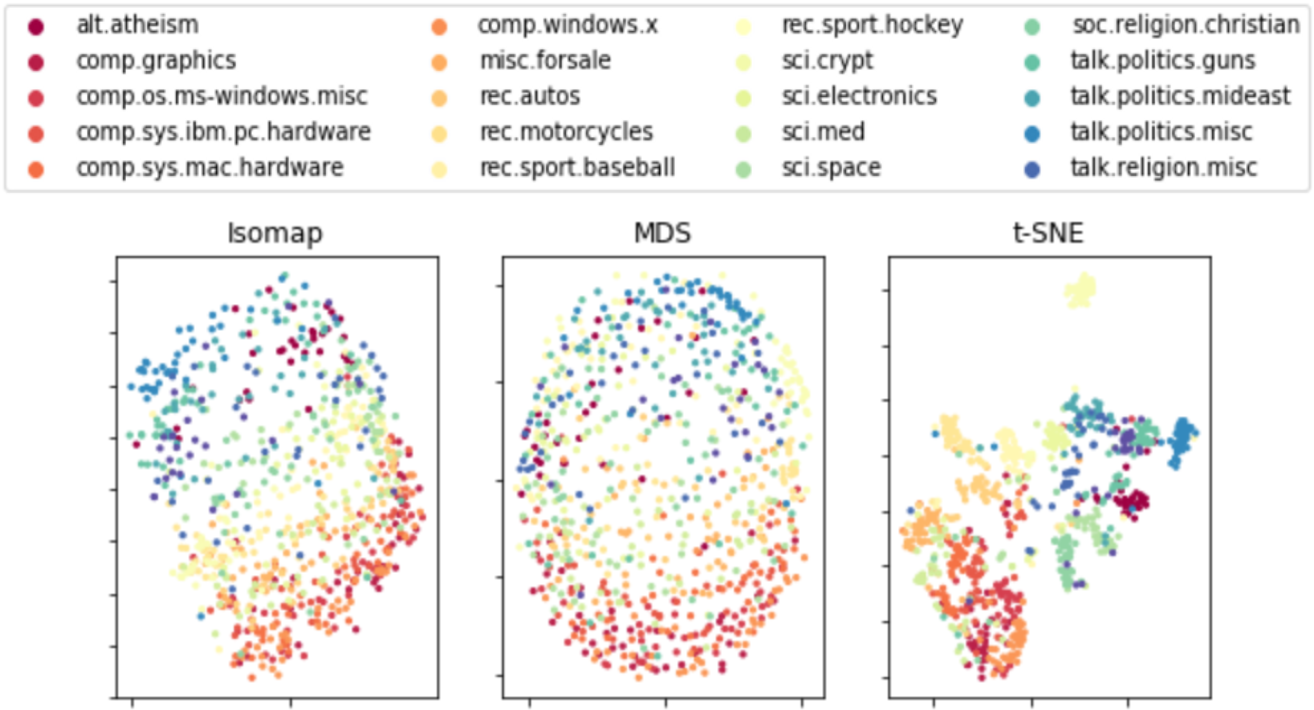

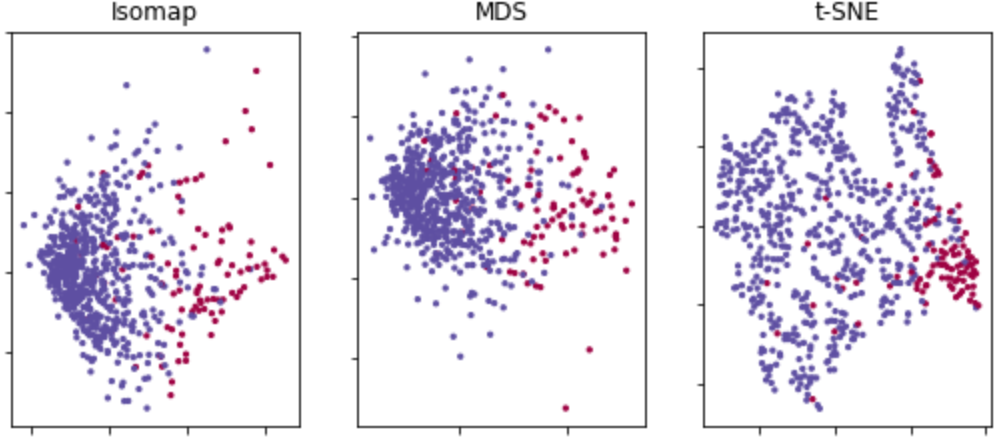
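
The idea of replacing fixed-point updates with learned operators on embeddings can be sketched roughly as follows. This is a minimal forward-pass illustration in NumPy, not the published architecture: the class `TinyCoNN`, the weight names, the single-layer ReLU operators, the sum pooling, and the number of iterations are all assumptions made for the example. Training (backpropagating a classification loss through the unrolled iterations) is omitted.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


class TinyCoNN:
    """Hypothetical sketch: alternating embedding updates for one document."""

    def __init__(self, vocab_size, embed_dim, num_classes, seed=0):
        rng = np.random.default_rng(seed)
        scale = 0.1
        self.embed_dim = embed_dim
        # Learnable vector per vocabulary word (the observed w_n).
        self.word_vecs = rng.normal(0.0, scale, (vocab_size, embed_dim))
        # Operator updating each word-level embedding from [word vector, document embedding].
        self.W_z = rng.normal(0.0, scale, (2 * embed_dim, embed_dim))
        # Operator updating the document-level embedding from [prior vector, pooled word embeddings].
        self.W_theta = rng.normal(0.0, scale, (2 * embed_dim, embed_dim))
        # Learnable stand-in for the prior over topic proportions.
        self.prior = rng.normal(0.0, scale, embed_dim)
        # Linear classifier on the final document embedding.
        self.W_out = rng.normal(0.0, scale, (embed_dim, num_classes))

    def forward(self, word_ids, num_iters=3):
        x = self.word_vecs[word_ids]              # (n_words, embed_dim)
        mu_theta = np.zeros(self.embed_dim)       # initial document embedding
        for _ in range(num_iters):
            # Word-level update: every word sees the current document embedding.
            doc_ctx = np.broadcast_to(mu_theta, x.shape)
            mu_z = relu(np.concatenate([x, doc_ctx], axis=1) @ self.W_z)
            # Document-level update: sees the prior and the sum of word embeddings.
            pooled = mu_z.sum(axis=0)
            mu_theta = relu(np.concatenate([self.prior, pooled]) @ self.W_theta)
        logits = mu_theta @ self.W_out
        shifted = logits - logits.max()           # numerically stable softmax
        exp = np.exp(shifted)
        return exp / exp.sum()


# Usage: class probabilities for a toy document given as word indices.
model = TinyCoNN(vocab_size=50, embed_dim=8, num_classes=4)
probs = model.forward(np.array([1, 5, 7, 7, 3]))
print(probs)
```

The loop mirrors the cooperative structure of the fixed-point updates: the document embedding and the word embeddings are refreshed in turn, each conditioned on the other, and only the final document embedding is passed to the classifier. In an end-to-end setup, the label loss on `probs` would drive the gradients for every weight matrix above.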

- Visualization of some results on the 20 Newsgroups dataset and the Multi-Domain Sentiment analysis dataset.